随时关注《生信技能树》B站的我,马上就看到了一个全新NGS组学视频课程《microRNA-seq数据分析实战演练》,还等什么呢,当然是follow起来啦!

首先是下载相关数据库

需要下载前体与成熟miRNA,看视频的时候就可以先下载参考序列啦,目前版本仍然是271个物种的38589miRNA序列。

mkdir mirna

mkdir reference

nohup wget ftp://mirbase.org/pub/mirbase/CURRENT/hairpin.fa.gz &

nohup wget ftp://mirbase.org/pub/mirbase/CURRENT/mature.fa.gz &

gunzip hairpin.fa.gz

gunzip mature.fa.gz

cat hairpin.fa|grep '>'|awk '{print $3,$4}' |sort|uniq -c|wc -l

#265

cat mature.fa|grep '>'|awk '{print $3,$4}' |sort|uniq -c|wc -l

#265

为撒只有265,不是271个物种么?后面再去想。。。、

统计fasta长度

主要思路是多行换为1行计算。我也不懂下面的perl代码,但是感觉好神奇。其它编程语言都是可以达到同样的目的!

##前体长度

awk '/^>/ {printf("\n%s\t",$0);next;} {printf("%s",$0);} END {printf("\n");}' < hairpin.fa | tr "\t" "\n"|grep -v '>'| awk '{print length}'|uniq -c|sort -n -k2

###分布从39-2354

##成熟体长度

awk '/^>/ {printf("\n%s\t",$0);next;} {printf("%s",$0);} END {printf("\n");}' < mature.fa | tr "\t" "\n"|grep -v '>'| awk '{print length}'|uniq -c|sort -n -k2

###15-34

综述与应用的文章自己去看,我也偷个懒,毕竟这个miRNA-seq数据项目我是没有接触的, 仅仅是学习而已。

miRNA芯片探针

aligent与affy比较出名,它们都有自己的miRNA芯片。

例子GSE143564

miRNAseq

2020年最新的流程

先搭建环境:

因为软件都是常用软件,包括fastQC,bowtie,hisat2等,所以这里就不赘述了。

构建索引

现需要构建miRNA库的索引,使用前面下载好的数据库文件:

###注意的是要把U转换成T

## Homo sapiens

perl -alne '{if(/^>/){if(/Homo/){$tmp=1}else{$tmp=0}};next if $tmp!=1;s/U/T/g if !/>/;print }' hairpin.fa > hairpin.human.fa

perl -alne '{if(/^>/){if(/Homo/){$tmp=1}else{$tmp=0}};next if $tmp!=1;s/U/T/g if !/>/;print }' mature.fa > mature.human.fa

# 这里值得一提的是miRBase数据库下载的序列,居然都是用U表示的,也就是说就是miRNA序列,而不是转录成该miRNA的基因序列,而我们测序的都是基因序列。

nohup bowtie-build hairpin.fa hairpin.fa &

nohup bowtie-build mature.fa mature.fa &

注意:bowtie与bowtie2 的区别,参考引用自https://blog.csdn.net/soyabean555999/article/details/62235577/ bowtie有1和2的差別:

1,bowtie 1出現的早,所以對於測序長度在50bp以下的序列效果不錯,而bowtie2主要針對的是長度在50bp以上的測序的。

2,Bowtie 2支持有空位的比對

3,Bowtie 2支持局部比對,也可以全局比對

4,Bowtie 2對最長序列沒有要求,但是Bowtie 1最長不能超過1000bp。

5、Bowtie 2 allows alignments to [overlap ambiguous characters] (eg Ns) in the reference. Bowtie 1 does not.

6,Bowtie 2不能比對colorspace reads.

…………

使用bowtie-build命令构建的索引结果如下

ls -lh|cut -d ' ' -f 5-

# 5.9M Jul 20 11:02 hairpin.fa

# 7.5M Jul 21 18:06 hairpin.fa.1.ebwt

# 322K Jul 21 18:06 hairpin.fa.2.ebwt

# 341K Jul 21 18:06 hairpin.fa.3.ebwt

# 643K Jul 21 18:06 hairpin.fa.4.ebwt

# 7.5M Jul 21 18:06 hairpin.fa.rev.1.ebwt

# 322K Jul 21 18:06 hairpin.fa.rev.2.ebwt

# 3.7M Jul 20 11:03 mature.fa

# 7.6M Jul 21 18:06 mature.fa.1.ebwt

# 94K Jul 21 18:06 mature.fa.2.ebwt

# 430K Jul 21 18:06 mature.fa.3.ebwt

# 187K Jul 21 18:06 mature.fa.4.ebwt

# 7.6M Jul 21 18:06 mature.fa.rev.1.ebwt

# 94K Jul 21 18:06 mature.fa.rev.2.ebwt

可以看到每个fq文件会被bowtie-build构建后有6个索引。

数据下载

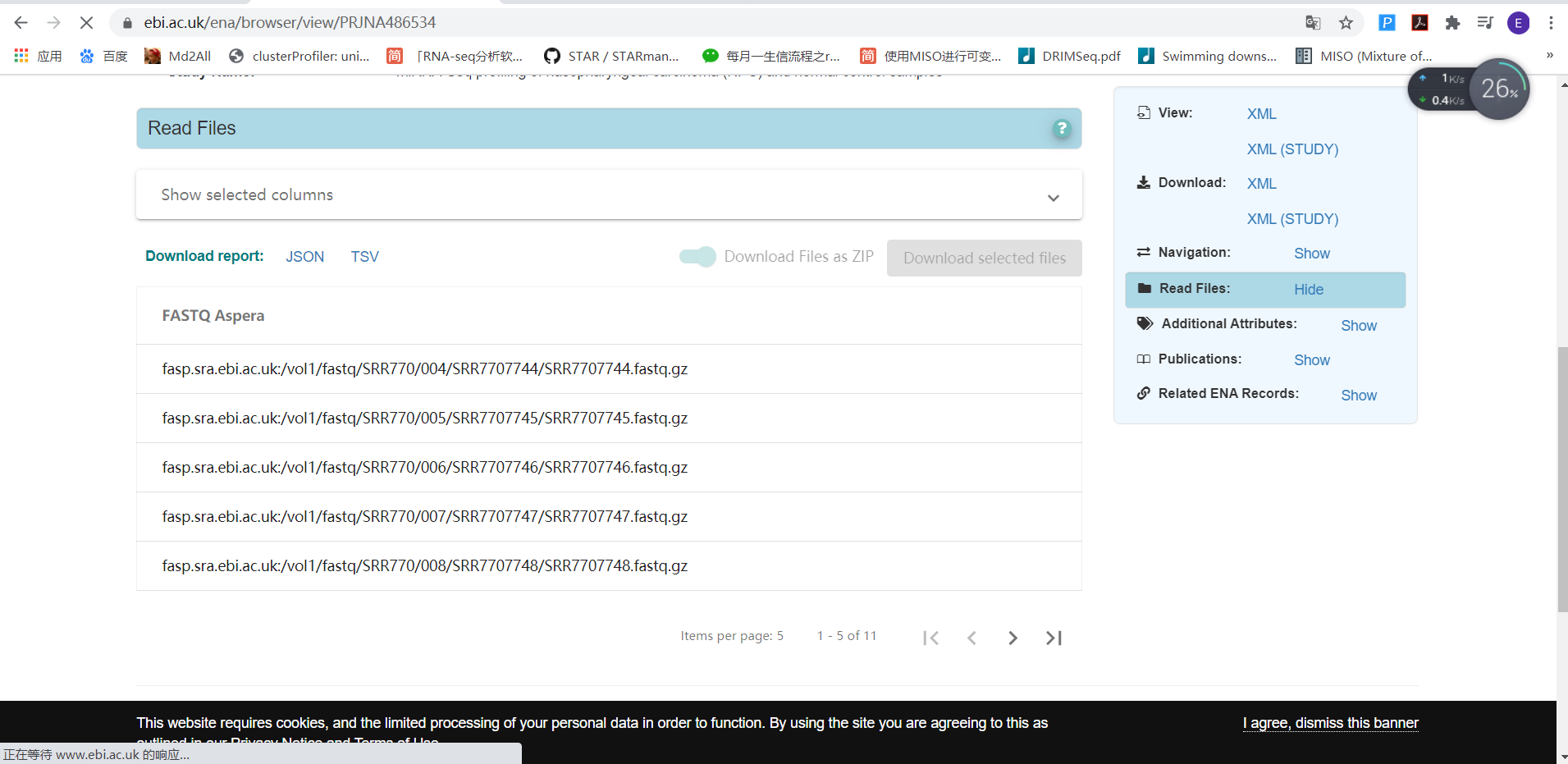

这里我直接选用18年文章的数据啦,其实方法是差不多的。在ebi下载,项目号是Project: PRJNA486534,共11个样本。

用aspera下载,方法跟下载转录组方法一致。

cat new.txt |tr ";" "\n"|sed 's/\///'|sed 's/^/era-fasp@/g'

# era-fasp@fasp.sra.ebi.ac.uk:vol1/fastq/SRR770/004/SRR7707744/SRR7707744.fastq.gz

# era-fasp@fasp.sra.ebi.ac.uk:vol1/fastq/SRR770/005/SRR7707745/SRR7707745.fastq.gz

# era-fasp@fasp.sra.ebi.ac.uk:vol1/fastq/SRR770/006/SRR7707746/SRR7707746.fastq.gz

# era-fasp@fasp.sra.ebi.ac.uk:vol1/fastq/SRR770/007/SRR7707747/SRR7707747.fastq.gz

# era-fasp@fasp.sra.ebi.ac.uk:vol1/fastq/SRR770/008/SRR7707748/SRR7707748.fastq.gz

# era-fasp@fasp.sra.ebi.ac.uk:vol1/fastq/SRR770/009/SRR7707749/SRR7707749.fastq.gz

# era-fasp@fasp.sra.ebi.ac.uk:vol1/fastq/SRR770/000/SRR7707750/SRR7707750.fastq.gz

# era-fasp@fasp.sra.ebi.ac.uk:vol1/fastq/SRR770/001/SRR7707751/SRR7707751.fastq.gz

# era-fasp@fasp.sra.ebi.ac.uk:vol1/fastq/SRR770/002/SRR7707752/SRR7707752.fastq.gz

# era-fasp@fasp.sra.ebi.ac.uk:vol1/fastq/SRR770/003/SRR7707753/SRR7707753.fastq.gz

# era-fasp@fasp.sra.ebi.ac.uk:vol1/fastq/SRR770/004/SRR7707754/SRR7707754.fastq.gz

cat new.txt |tr ";" "\n"|sed 's/\///'|sed 's/^/era-fasp@/g' >id1.txt

###bulk

cat> wget.sh

cat id1.txt | while read id

do

ascp -QT -l 300m -P33001 -i /home/xlfang/.aspera/connect/etc/asperaweb_id_dsa.openssh $id ./

done

cat wget.sh #check command

nohup bash wget.sh & #no hang up

结果如下,可以看到测序数据量并不大,这样的数据量其实个人电脑都可以处理的哦:

ls -lh|cut -d " " -f 5-

273M Jul 21 19:17 SRR7707744.fastq.gz

294M Jul 21 19:18 SRR7707745.fastq.gz

307M Jul 21 19:18 SRR7707746.fastq.gz

257M Jul 21 19:19 SRR7707747.fastq.gz

262M Jul 21 19:20 SRR7707748.fastq.gz

323M Jul 21 19:21 SRR7707749.fastq.gz

332M Jul 21 19:21 SRR7707750.fastq.gz

311M Jul 21 19:22 SRR7707751.fastq.gz

318M Jul 21 19:23 SRR7707752.fastq.gz

331M Jul 21 19:23 SRR7707753.fastq.gz

333M Jul 21 19:24 SRR7707754.fastq.gz

质控

这里Jimmy老师用的是fastx_toolkit进行clean与trim,所以我也用这个,反正是学习嘛。

conda install -c bioconda fastx_toolkit

fastqc -t 2 -o ../2.fastq_qc /zju/phf5a/mirna/1.raw/*.fastq.gz

multiqc ./*zip -o ./2.fastq_qc

###trim+clean

cat> fastx.sh

ls *.gz|while read id

do

echo $id

zcat $id|fastq_quality_filter -v -q 20 -p 80 -Q 33 -i - -o tmp ;

fastx_trimmer -v -f 1 -l 27 -m 15 -i tmp -Q33 -z -o ${id%%.*}_clean.fq.gz ;

fastqc -t 2 -o ../2.fastq_qc ${id%%.*}_clean.fq.gz

done

结果如下

ls -lh *_clean.fq.gz|cut -d " " -f 5-

174M Jul 21 20:13 SRR7707744_clean.fq.gz

160M Jul 21 20:19 SRR7707745_clean.fq.gz

121M Jul 21 20:25 SRR7707746_clean.fq.gz

163M Jul 21 20:31 SRR7707747_clean.fq.gz

169M Jul 21 20:38 SRR7707748_clean.fq.gz

198M Jul 21 20:44 SRR7707749_clean.fq.gz

200M Jul 21 20:51 SRR7707750_clean.fq.gz

189M Jul 21 20:57 SRR7707751_clean.fq.gz

193M Jul 21 21:04 SRR7707752_clean.fq.gz

203M Jul 21 21:11 SRR7707753_clean.fq.gz

203M Jul 21 21:18 SRR7707754_clean.fq.gz

可以看到基本只有100-200m,说明去除了很多序列,或者说每个序列剪切了很多碱基。具体是什么情况呢,可以去深入探索,亲爱的读者们如果时间比较多就试试看哈。

比对

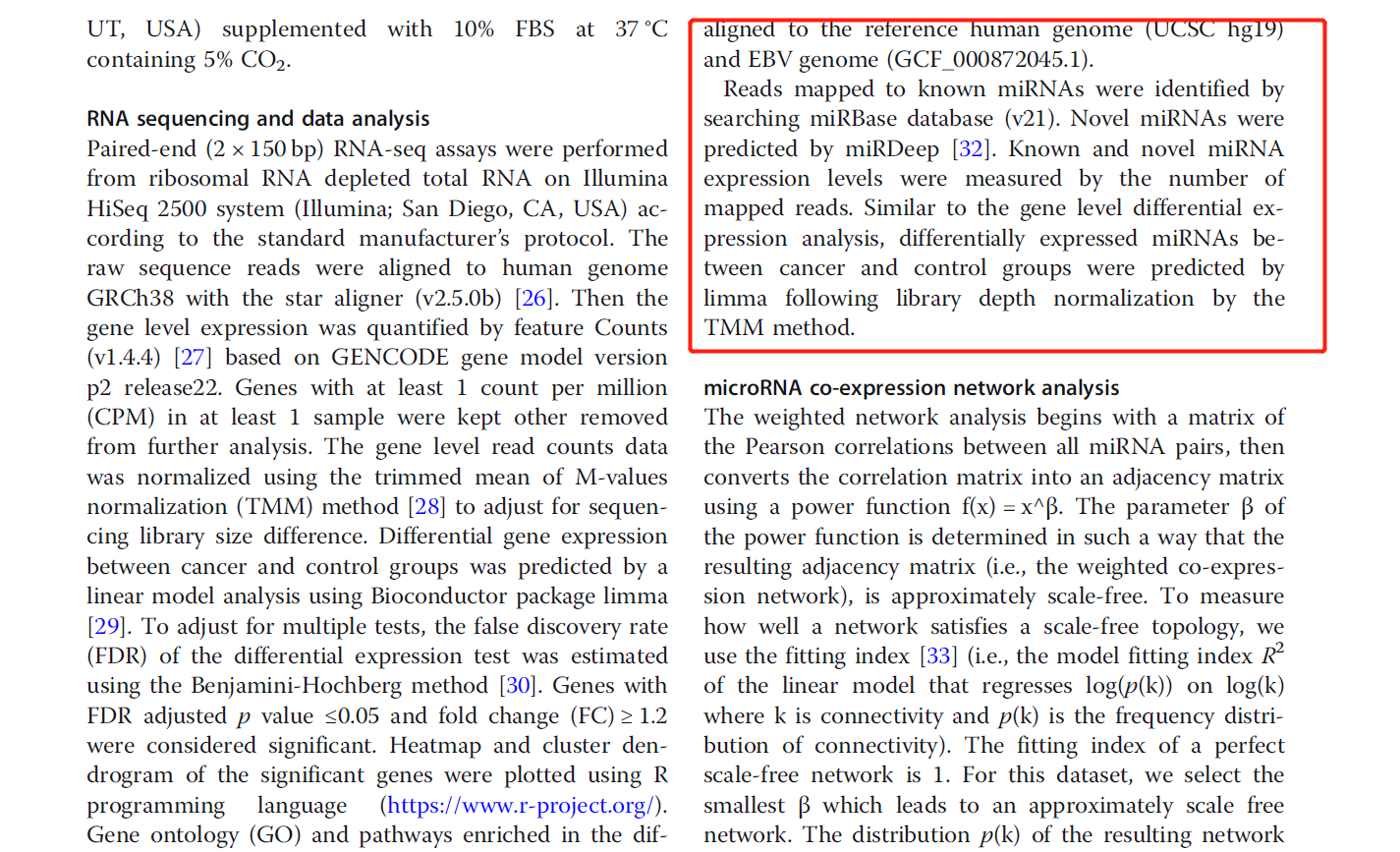

比对有两种:与mir库比对,以及与参考基因组比对。根据原文与TCGA的pipelinehttps://academic.oup.com/nar/article/44/1/e3/2499678。**一般文章都是2种都比对了。参考基因组进行比对+miRBase的gff来count或者miRBase来进行map miRNA** ,有一些文章只用了第一种。

参考基因组

一般来说,看看文献使用的是什么,我们就follow那个流程即可,可以看到下面截图的文章比对了很多哦:

不仅仅是miRNA

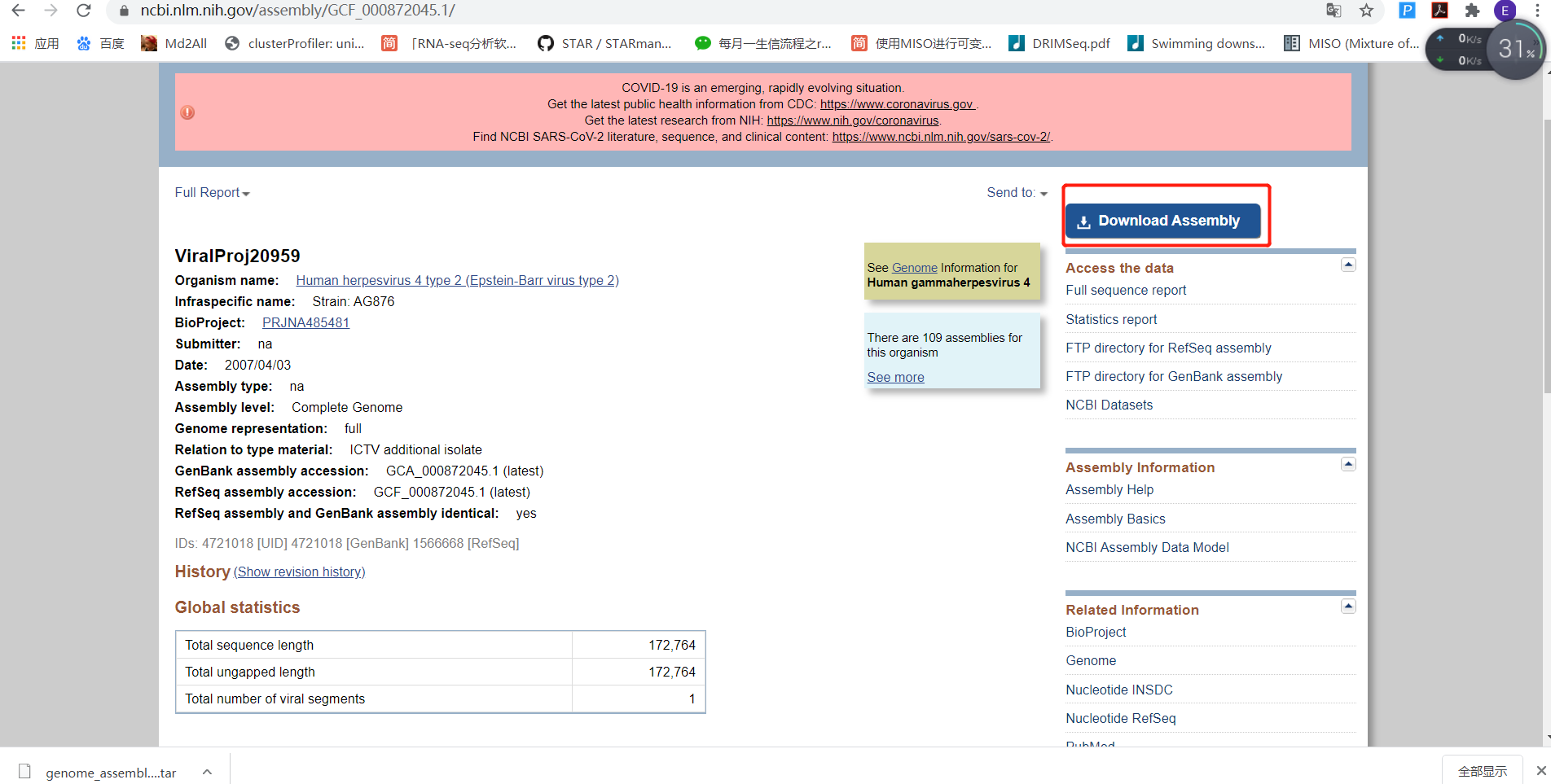

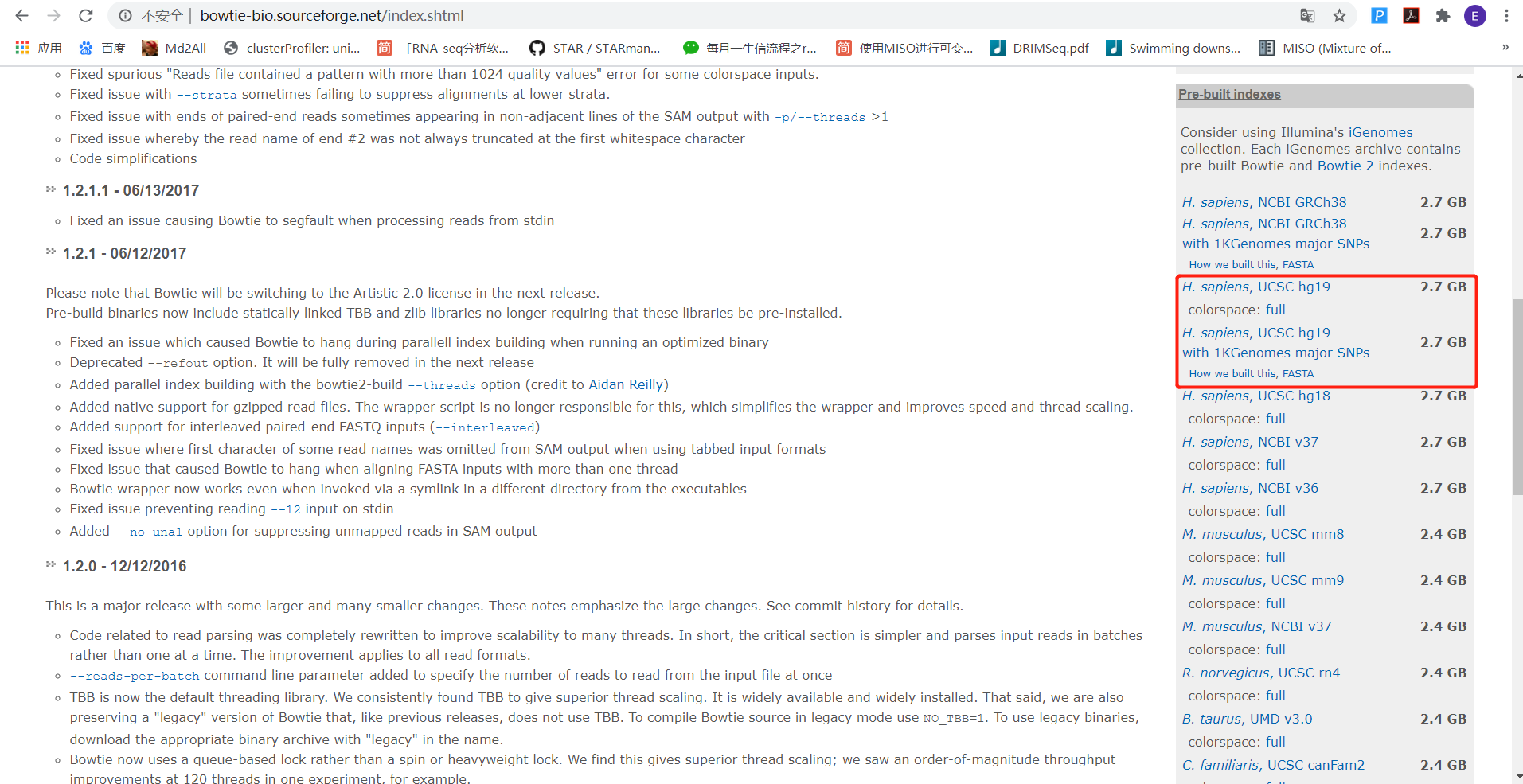

文章选用UCSC的hg19与NCBI的EBV的基因组https://www.ncbi.nlm.nih.gov/assembly/GCF_000872045.1/,下载就好了。

EBV的基因组可以在NCBI下载,hg19我直接用的官网构建的index

nohup bowtie-build GCF_000872045.1_ViralProj20959_genomic.fna.gz EBV.fa &

nohup unzip hg19.ebwt.zip &

ls -lh hg19*|cut -d " " -f 5-

# 784M Nov 14 2009 hg19.1.ebwt

# 342M Nov 14 2009 hg19.2.ebwt

# 3.3K Nov 14 2009 hg19.3.ebwt

# 683M Nov 14 2009 hg19.4.ebwt

# 784M Nov 14 2009 hg19.rev.1.ebwt

# 342M Nov 14 2009 hg19.rev.2.ebwt

ls -lh EBV.fa* |cut -d " " -f 5-

# 4.1M Jul 22 12:42 EBV.fa.1.ebwt

# 22K Jul 22 12:42 EBV.fa.2.ebwt

# 17 Jul 22 12:42 EBV.fa.3.ebwt

# 43K Jul 22 12:42 EBV.fa.4.ebwt

# 4.1M Jul 22 12:42 EBV.fa.rev.1.ebwt

# 22K Jul 22 12:42 EBV.fa.rev.2.ebwt

conda activate rna

###比对到基因组与病毒组

cat > align.bash

index=/zju/phf5a/mirna/reference/hg19

ls *_clean.fq.gz| while read id

do

echo $id

bowtie -p 2 $index $id -S tmp ;

samtools view -bS -@ 2 tmp -o ${id}_genome.bam

done

###报告如下

cat nohup.out

# SRR7707744_clean.fq.gz

# reads processed: 13812663

# reads with at least one reported alignment: 2152362 (15.58%)

# reads that failed to align: 11660301 (84.42%)

# Reported 2152362 alignments

# SRR7707745_clean.fq.gz

# reads processed: 12243879

# reads with at least one reported alignment: 1631629 (13.33%)

# reads that failed to align: 10612250 (86.67%)

# Reported 1631629 alignments

# SRR7707746_clean.fq.gz

# reads processed: 8307240

# reads with at least one reported alignment: 1148081 (13.82%)

# reads that failed to align: 7159159 (86.18%)

# Reported 1148081 alignments

# SRR7707747_clean.fq.gz

# reads processed: 13017092

# reads with at least one reported alignment: 2614253 (20.08%)

# reads that failed to align: 10402839 (79.92%)

# Reported 2614253 alignments

# SRR7707748_clean.fq.gz

# reads processed: 13335526

# reads with at least one reported alignment: 2494180 (18.70%)

# reads that failed to align: 10841346 (81.30%)

# Reported 2494180 alignments

# SRR7707749_clean.fq.gz

# reads processed: 12855713

# reads with at least one reported alignment: 1834882 (14.27%)

# reads that failed to align: 11020831 (85.73%)

# Reported 1834882 alignments

# SRR7707750_clean.fq.gz

# reads processed: 13245630

# reads with at least one reported alignment: 3043002 (22.97%)

# reads that failed to align: 10202628 (77.03%)

# Reported 3043002 alignments

# SRR7707751_clean.fq.gz

# reads processed: 12667220

# reads with at least one reported alignment: 1996596 (15.76%)

# reads that failed to align: 10670624 (84.24%)

# Reported 1996596 alignments

# SRR7707752_clean.fq.gz

# reads processed: 13173414

# reads with at least one reported alignment: 1721895 (13.07%)

# reads that failed to align: 11451519 (86.93%)

# Reported 1721895 alignments

# SRR7707753_clean.fq.gz

# reads processed: 13408954

# reads with at least one reported alignment: 2287058 (17.06%)

# reads that failed to align: 11121896 (82.94%)

# Reported 2287058 alignments

# SRR7707754_clean.fq.gz

# reads processed: 13560056

# reads with at least one reported alignment: 2504986 (18.47%)

# reads that failed to align: 11055070 (81.53%)

# Reported 2504986 alignments

cat > align1.bash

index=/zju/phf5a/mirna/reference/EBV.fa

ls *_clean.fq.gz| while read id

do

echo $id

bowtie -p 2 $index $id -S tmp ;

samtools view -bS -@ 2 tmp -o ${id}_ebv.bam

done

###报告如下

# SRR7707744_clean.fq.gz

# reads processed: 13812663

# reads with at least one reported alignment: 540617 (3.91%)

# reads that failed to align: 13272046 (96.09%)

# Reported 540617 alignments

# SRR7707745_clean.fq.gz

# reads processed: 12243879

# reads with at least one reported alignment: 1016984 (8.31%)

# reads that failed to align: 11226895 (91.69%)

# Reported 1016984 alignments

# SRR7707746_clean.fq.gz

# reads processed: 8307240

# reads with at least one reported alignment: 820643 (9.88%)

# reads that failed to align: 7486597 (90.12%)

# Reported 820643 alignments

# SRR7707747_clean.fq.gz

# reads processed: 13017092

# reads with at least one reported alignment: 299324 (2.30%)

# reads that failed to align: 12717768 (97.70%)

# Reported 299324 alignments

# SRR7707748_clean.fq.gz

# reads processed: 13335526

# reads with at least one reported alignment: 831 (0.01%)

# reads that failed to align: 13334695 (99.99%)

# Reported 831 alignments

# SRR7707749_clean.fq.gz

# reads processed: 12855713

# reads with at least one reported alignment: 676615 (5.26%)

# reads that failed to align: 12179098 (94.74%)

# Reported 676615 alignments

# SRR7707750_clean.fq.gz

# reads processed: 13245630

# reads with at least one reported alignment: 206912 (1.56%)

# reads that failed to align: 13038718 (98.44%)

# Reported 206912 alignments

# SRR7707751_clean.fq.gz

# reads processed: 12667220

# reads with at least one reported alignment: 274 (0.00%)

# reads that failed to align: 12666946 (100.00%)

# Reported 274 alignments

# SRR7707752_clean.fq.gz

# reads processed: 13173414

# reads with at least one reported alignment: 231 (0.00%)

# reads that failed to align: 13173183 (100.00%)

# Reported 231 alignments

# SRR7707753_clean.fq.gz

# reads processed: 13408954

# reads with at least one reported alignment: 564 (0.00%)

# reads that failed to align: 13408390 (100.00%)

# Reported 564 alignments

# SRR7707754_clean.fq.gz

# reads processed: 13560056

# reads with at least one reported alignment: 482 (0.00%)

# reads that failed to align: 13559574 (100.00%)

# Reported 482 alignments

###

-n模式與-v模式。

默認的,bowtie採用了和Maq一樣的質量控制策略,設置-n 2 -l 28 -e 70。總的來說,比對模式分為兩種,一種是-n 模式, 一種是-v 模式,而且這兩種模式是不能同時使用的。bowtie默認使用-n模式。

-n模式參數: -n N -l L -e E

其中N,L,E都為整數。-n N代表在高保真區內錯配不能超過N個,可以是0〜3,一般的設置為2。-l L代表序列高保真區的長度,最短不能少於5,對於短序列長度為32的,設置為28就很不錯。-e E代表在錯配位點Phred quality值不能超過E,默認值為40

另一种比对

另一种是比对miRBase库的fa文件,网上有比对颈环结构,有比对mature的,还有二者合一比对的。。

cat > align2.bash

index=/zju/phf5a/mirna/reference/mature.fa

ls *_clean.fq.gz| while read id

do

echo $id

bowtie -p 2 $index $id -S tmp ;

samtools view -bS -@ 2 tmp -o ${id}_mature.bam

done

####

cat > align3.bash

index=/zju/phf5a/mirna/reference/hairpin.fa

ls *_clean.fq.gz| while read id

do

echo $id

bowtie -p 2 $index $id -S tmp ;

samtools view -bS -@ 2 tmp -o ${id}_hairpin.bam

done

但是比对率真的好低。。所以还是选用跟文章一直的方法。

counts

这里与文章一直,选用miRBase的gff文件来进行计算。这里我就用featurecounts啦,因为快。

先把bam文件sort一下

cat >sort.bash

ls *_genome.bam| while read id

do

echo $id

samtools sort -@ 2 -o ${id}_sort.bam -T sort -O bam $id

done

###下载hsa.gff文件

nohup wget ftp://mirbase.org/pub/mirbase/CURRENT/genomes/hsa.gff3 &

featurecounts

featureCounts -T 2 -F gff -M -t miRNA -g Name -a $gtf -o all.counts.mature.txt *genome* 1>counts.mature.log 2>&1

featureCounts -T 2 -F gff -M -t miRNA_primary_transcript -g Name -a $gtf -o all.counts.hairpin.txt *genome* 1>counts.hairpin.log 2>&1

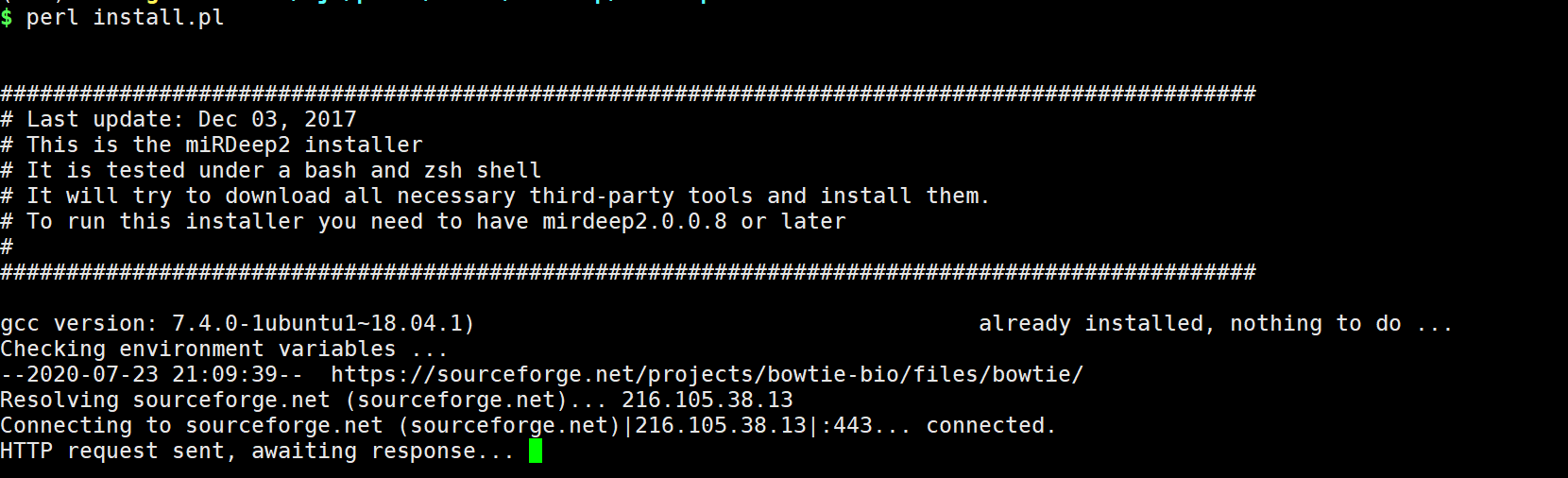

mirdeep2是一个打包好的流程

软件安装如下,可以看到是一个perl程序:

mkdir mirdeep

cd mirdeep/

nohup git clone https://github.com/rajewsky-lab/mirdeep2.git &

###安装mirdeep,按照流程安装即可,会自动运行

cd mirdeep2

perl install.pl

source ~/.bashrc

perl install.pl

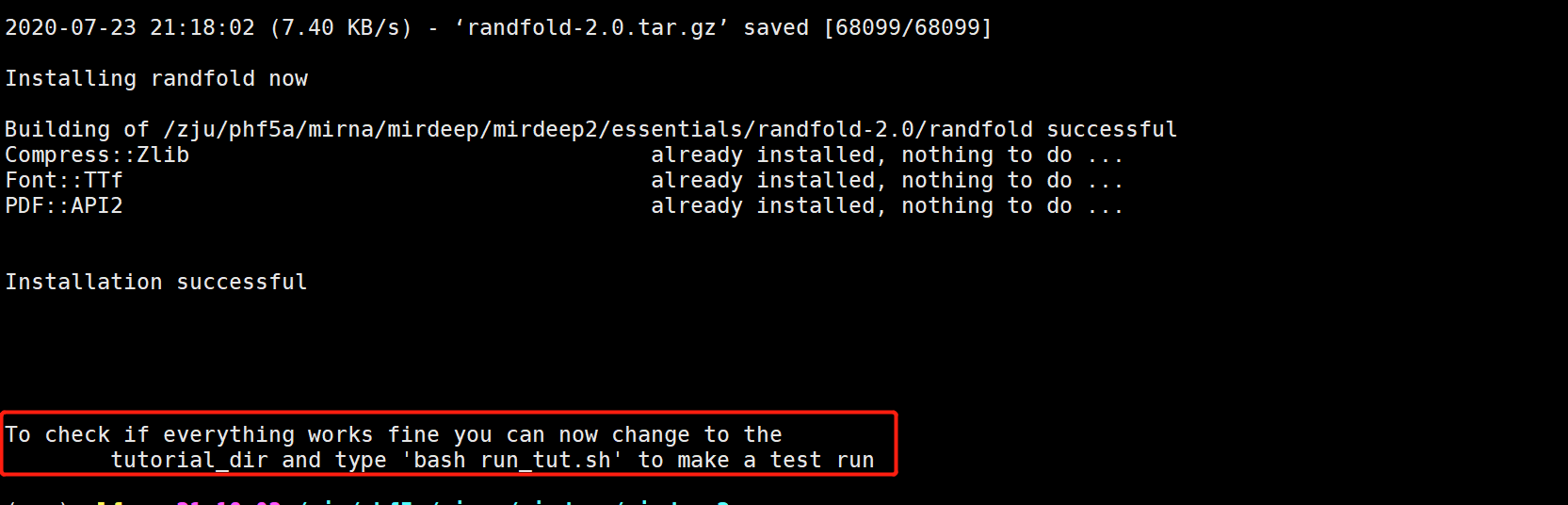

而且软件也很人性化,完成后可以用给的例子进行检查是否安装正确

##测试

cd tutorial_dir/

bash run_tut.sh

没有问题,就可以用自己的数据来做了。

参考基因组建立索引

这里就用hg19的bowtie索引就行。

比对序列

将物种fa序列比对到基因组

conda activate rna

cd /zju/phf5a/mirna/mirdeep

mapper.pl reads.fa -e -j -k TCGTATGCCGTCTTCTGCTTGT -l 18 -m -p cel_cluster \

-s reads_collapsed.fa -t reads_collapsed_vs_genome.arf -v ###因为写在路径了,直接可以调用,参数含义c,表示输入是fa;j表示移除ATCGUNatcgun以外的字符;k表示去除3‘街头序列;l表示最小长度,默认18;-m 合併相同的reads;p表示所索引文件;s表示输出的fa;t表示输出后的比对文件 v表示输出进度报告

但是这里就有2个问题了,

- 为什么还要再比对一次。。明明都比对过了

- 接头我也不知道,懒得去查序列了。

后来搜索才发现,原来其实开发者也想到了这一点,可以直接用比对后的sam文件! 用百度搜mirdeep2,永远都是最简单而且一摸一样的example,所以能读一手文献别读二手文献是很有道理的

另外官网也给出了如何批量比对的方法

综上所述,理解一个软件最好的方式还是去官网读文档!

所以可以直接用比对后的bam来进行mapping

conda activate rna

cd /zju/phf5a/mirna/1.raw

cat > mirdeep.bash

ls *_clean.fq.gz_genome.bam| while read id

do

echo $id

samtools view -h -@ 2 -o ${id%%.*}.sam $id ;

perl /zju/phf5a/mirna/mirdeep/mirdeep2/bin/bwa_sam_converter.pl -i ${id%%.*}.sam -c -o ${id%%.*}_collapsed.fa -a ${id%%.*}.arf

done

结果如下

ls -lh *.fa|cut -d " " -f 5-

# # 21M Aug 5 10:03 SRR7707744_clean_collapsed.fa

# # 21M Aug 5 10:04 SRR7707745_clean_collapsed.fa

# # 19M Aug 5 10:05 SRR7707746_clean_collapsed.fa

# # 21M Aug 5 10:07 SRR7707747_clean_collapsed.fa

# # 21M Aug 5 10:08 SRR7707748_clean_collapsed.fa

# # 27M Aug 5 10:09 SRR7707749_clean_collapsed.fa

# # 21M Aug 5 10:11 SRR7707750_clean_collapsed.fa

# # 17M Aug 5 10:12 SRR7707751_clean_collapsed.fa

# # 18M Aug 5 10:13 SRR7707752_clean_collapsed.fa

# # 21M Aug 5 10:15 SRR7707753_clean_collapsed.fa

# # 22M Aug 5 10:16 SRR7707754_clean_collapsed.fa

ls -lh *.arf|cut -d " " -f 5-

# 8.8M Aug 5 10:04 SRR7707744_clean.arf

# 14M Aug 5 10:05 SRR7707745_clean.arf

# 15M Aug 5 10:06 SRR7707746_clean.arf

# 12M Aug 5 10:07 SRR7707747_clean.arf

# 13M Aug 5 10:09 SRR7707748_clean.arf

# 17M Aug 5 10:10 SRR7707749_clean.arf

# 15M Aug 5 10:11 SRR7707750_clean.arf

# 8.7M Aug 5 10:13 SRR7707751_clean.arf

# 7.5M Aug 5 10:14 SRR7707752_clean.arf

# 12M Aug 5 10:15 SRR7707753_clean.arf

# 13M Aug 5 10:17 SRR7707754_clean.arf

一个样本一分钟左右比对完成,结果会生成一个fa文件,一个比对信息文件arf,打开来看看

cat SRR7707744_clean_collapsed.fa|head

# >seq_1_x1

# AGGGACGGCGGAGCGAGCGCAGATCGG

# >seq_2_x1

# AGGTAACGGGCTGGGCCATAGATCGGA

# >seq_3_x1

# TCCCGCACTGAGCTGCCCCGAGAGATC

# >seq_4_x3

# TCTTTGGTTATCTAGCTGTATGACATC

# >seq_5_x1

# GGCGGCGGCAGTCGGCGGGCGGCCGAT

###就是可能的mirna序列

cat SRR7707744_clean.arf|head

# seq_334099_x26461 27 1 27 gcgggtgatgcgaactggagtctgagc chr17 27 62223486 6222351gcgggtgatgcgaactggagtctgagc + 0 mmmmmmmmmmmmmmmmmmmmmmmmmmm

# seq_158294_x49326 27 1 27 gcattggtggttcagtggtagaattct chr17 27 8029064 8029090 gcattggtggttcagtggtagaattct + 0 mmmmmmmmmmmmmmmmmmmmmmmmmmm

# seq_247582_x2 27 1 27 cccccccctgctaaatttgactggctt chr2 27 200648064 200648090 ccccccactgctaaatttgactggctt - 1 mmmmmmMmmmmmmmmmmmmmmmmmmmm

# seq_307490_x942864 27 1 27 tagcttatcagactgatgttgacagat chr17 27 57918634 # 5791866tagcttatcagactgatgttgactgtt + 2 mmmmmmmmmmmmmmmmmmmmmmmMmMm

# seq_326711_x29714 27 1 27 ttacttgatgacaataaaatatctgat chr1 27 173833290 #173833316 ttacttgatgacaataaaatatctgat - 0 mmmmmmmmmmmmmmmmmmmmmmmmmmm

# seq_155576_x60123 27 1 27 tgagaactgaattccataggctgagat chr10 27 104196277 104196303 tgagaactgaattccataggctgtgag + 2 mmmmmmmmmmmmmmmmmmmmmmmMmmM

# seq_230857_x1 27 1 27 gggagttcgagaccagcctggtagatc chr1 27 27327656 27327682 aggagttcgagaccagcctgggagatc + 2 MmmmmmmmmmmmmmmmmmmmmMmmmmm

# seq_282822_x50 27 1 27 cttctcactactgcacttgaagatcgg chr4 27 147630062 147630088 cttctcactactgcacctgaagatggg + 2 mmmmmmmmmmmmmmmmMmmmmmmmMmm

# seq_119371_x1465 27 1 27 gctccgtgaggataagtaactctgagg chr11 27 62623040 6262306gctccgtgaggataaataactctgagg - 1 mmmmmmmmmmmmmmmMmmmmmmmmmmm

# seq_42088_x745 27 1 27 gaggtgtagaataagtgggaggccccc chr7 27 68527681 68527707 gaggtgtagaataagtgggaggccccc + 0 mmmmmmmmmmmmmmmmmmmmmmmmmmm

arf则更为详细的信息,包括序列名;长度;起始;终止;序列;map到哪条染色体;map到染色体的长度;map到染色体的起始终止位置;染色体上的序列;正负义链;错配数;如果有错配用M,否则就用m

找novel miRNA并定量

这里需要把人类已发现的miRNA序列从总库里找出来,这里直接用Jimmy老师写好的perl代码就可以了。

#####

perl -alne '{if(/^>/){if(/Homo/){$tmp=1}else{$tmp=0}};next if $tmp!=1;s/U/T/g if !/>/;print }' hairpin.fa > hairpin.human.fa

perl -alne '{if(/^>/){if(/Homo/){$tmp=1}else{$tmp=0}};next if $tmp!=1;s/U/T/g if !/>/;print }' mature.fa > mature.human.fa

####

perl -alne '{if(/^>/){if(/Homo/){$tmp=0}else{$tmp=1}};next if $tmp!=1;s/U/T/g if !/>/;print }' mature.fa > mature.others.fa

虽然不会perl,但是也可以才出来含义,所以也可以把其他物种的分离,只需要0 1 对换即可

然后批量运行perl脚本就可以了

cat >nover.bash

ls *.arf| while read id

do

echo $id

perl /zju/phf5a/mirna/mirdeep/mirdeep2/bin/miRDeep2.pl ${id%%.*}_collapsed.fa ../reference/hg19.fa $id ../reference/mature.human.fa ../reference/mature.others.fa ../reference/hairpin.human.fa -t Human 2>report.log

done

但是遇到了报错 ,看了看是格式问题,所以就改成它的格式即可:

cat hairpin.fa|grep '>'|awk '{print $3,$4}'|head

awk '{if (substr($0, 1, 1) == ">") print $1; else print $0}' mature.human.fa > mature.human_1.fa

awk '{if (substr($0, 1, 1) == ">") print $1; else print $0}' mature.human.fa > mature.human_1.fa mature.others.fa > mature.others_1.fa

awk '{if (substr($0, 1, 1) == ">") print $1; else print $0}' hairpin.human.fa > hairpin.human_1.fa

然后再批量运行即可

cat >nover.bash

ls *.arf| while read id

do

echo $id

perl /zju/phf5a/mirna/mirdeep/mirdeep2/bin/miRDeep2.pl ${id%%.*}_collapsed.fa ../reference/hg19.fa $id ../reference/mature.human_1.fa ../reference/mature.others_1.fa ../reference/hairpin.human_1.fa -t Human 2>report.log

done

运行完成后会生成一个叫 expression_analyses的文件夹, 其中一个结果如下

ls -lh |cut -d " " -f 5-

# 150 Aug 6 08:04 bowtie_mature.out

# 154 Aug 6 08:04 bowtie_reads.out

# 83K Aug 6 08:04 mature2hairpin

# 100K Aug 6 08:04 mature.converted

# 345K Aug 6 08:04 mature.human_1.fa_mapped.arf

# 239K Aug 6 08:04 mature.human_1.fa_mapped.bwt

# 253K Aug 6 08:04 miRBase.mrd

# 89K Aug 6 08:04 miRNA_expressed.csv

# 47K Aug 6 08:04 miRNA_not_expressed.csv

# 4.1M Aug 6 08:04 miRNA_precursor.1.ebwt

# 20K Aug 6 08:04 miRNA_precursor.2.ebwt

# 17K Aug 6 08:04 miRNA_precursor.3.ebwt

# 39K Aug 6 08:04 miRNA_precursor.4.ebwt

# 4.1M Aug 6 08:04 miRNA_precursor.rev.1.ebwt

# 20K Aug 6 08:04 miRNA_precursor.rev.2.ebwt

# 182K Aug 6 08:04 precursor.converted

# 22K Aug 6 08:04 precursor_not_expressed.csv

# 14K Aug 6 08:04 read_occ

# 4.5K Aug 6 08:04 rna.ps

# 21M Aug 6 08:04 SRR7707753_clean_collapsed.fa.converted

# 118K Aug 6 08:04 SRR7707753_clean_collapsed.fa_mapped.arf

# 86K Aug 6 08:04 SRR7707753_clean_collapsed.fa_mapped.bwt

最重要的就是miRNA_expressed.csv这个文件了,就是known和novel的counts数,可惜的是没有找到未知的miRNA,可能之前找到的已经存入库里吧

less miRNA_expressed.csv|head

#miRNA read_count precursor

# hsa-let-7a-5p 132 hsa-let-7a-1

# hsa-let-7a-3p 0 hsa-let-7a-1

# hsa-let-7a-5p 10095 hsa-let-7a-2

# hsa-let-7a-2-3p 0 hsa-let-7a-2

# hsa-let-7a-5p 8 hsa-let-7a-3

# hsa-let-7a-3p 0 hsa-let-7a-3

# hsa-let-7b-5p 190 hsa-let-7b

# hsa-let-7b-3p 0 hsa-let-7b

# hsa-let-7c-5p 1642 hsa-let-7c

将所有的表达文件作为一个文件

###改名

cd expression_analyses/

ls -lh|cut -d " " -f 10 > name.txt #输入到name文件

###写入脚本

cat name.txt |while read id

do

echo "mv ${id}/miRNA_expressed.csv ${id}/${id}miRNA_expressed.csv"

done > rename.command

cat rename.command

bash rename.command

###移动所有csv到一个文件夹

mkdir all

cat name.txt |while read id

do

echo "cp ${id}/${id}miRNA_expressed.csv ./all"

done > gathe.command

cat gathe.command

bash gathe.command

###去除文本开头的”#"

sed -i 's/#//g' *.csv

通过R来进行整合

差异分析

这里有个问题,文章只有10个样品的数据(6个NPC病人加上4个正常组织),但是上传的库竟然有11个数据?

难道是有一个数据被上传两次?

对于这样的诡异,我也不知道说什么好!

=-= 哈哈哈

额,不管了,就按照7 4 分组吧。按照文章所给的阈值做DEG分析

rm(list=ls())

options(digits = 4)

library(purrr)

a=list.files("./all","csv")

setwd("./all")

counts=a %>% map(function(x) read.csv(x,sep = "",header = T)) %>% reduce(cbind)

counts=counts[,c(1,seq(2,33,3))]

###

library(edgeR)

group=c(rep("NPC",7),rep("Control",4))

###用中位数去重

table(table(counts$miRNA))

#1 2 3 4 5 6 11

#2431 154 37 17 8 8 1

# 对重复基因名取平均表达量,然后将基因名作为行名

exprSet = as.data.frame(floor(avereps(counts[,-1],ID = counts$miRNA)))

###limma

library(limma)

group_list=as.factor(group)

expr=exprSet

design <- model.matrix(~0+group_list)

colnames(design)=levels(group_list)

rownames(design)=colnames(expr)

dge <- DGEList(counts=expr)

dge <- calcNormFactors(dge)

v <- voom(dge,design, normalize="quantile")

fit <- lmFit(v, design)

constrasts = paste(rev(levels(group_list)),collapse = "-")

cont.matrix <- makeContrasts(contrasts=constrasts,levels = design)

fit2=contrasts.fit(fit,cont.matrix)

fit2=eBayes(fit2)

DEG = topTable(fit2, coef=constrasts, n=Inf)

DEG = na.omit(DEG)

#logFC_cutoff <- with(DEG,mean(abs(logFC)) + 2*sd(abs(logFC)) )

logFC_cutoff <- 1.2

DEG$change = as.factor(

ifelse(DEG$P.Value < 0.05 & abs(DEG$logFC) > logFC_cutoff,

ifelse(DEG$logFC > logFC_cutoff ,'UP','DOWN'),'NOT')

)

head(DEG)

table(DEG$change)

#DOWN NOT UP

#12 2629 15

library(tinyarray)

cg1 = rownames(DEG)[DEG$change !="NOT"]

h1=draw_heatmap(expr[cg1,],group_list,scale_before = T,cluster_cols = T,annotation_legend = T)

v1=draw_volcano(DEG,pkg = 3,logFC_cutoff = 1.2)

library(patchwork)

h1+v1+plot_layout(guides = 'collect')

与原文差异巨大,可能原因是我做mirdeep并没有对病毒基因组进行mapping导致的,但是方法是没有问题。

总结

可以看到,不同的上游处理mirseq数据,下游结果差距也比较大。